Bias audits for geolocation-based algorithms

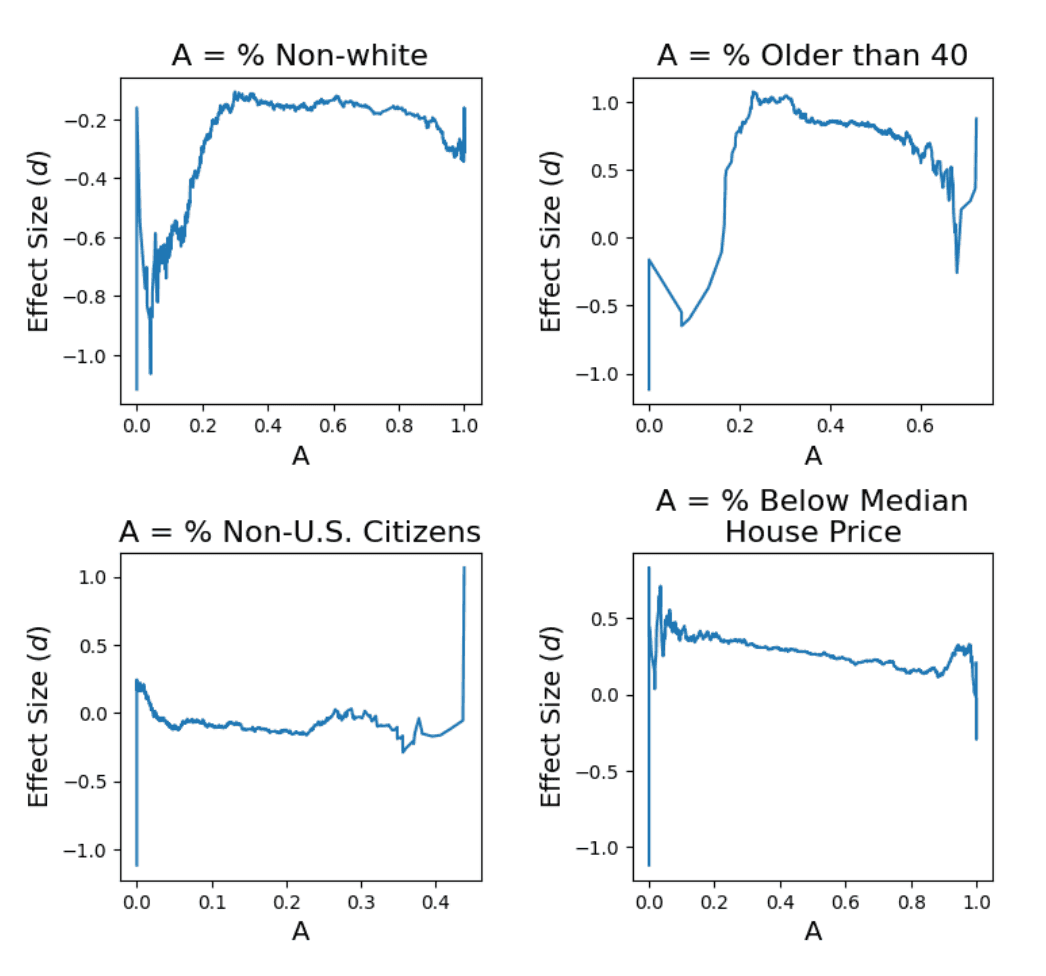

This study presents a methodology for measuring social bias in ride-hail pricing with respect to user attributes that are legally protected against discrimination, such as race. Looking at a large data set of ride-hail trips in Chicago, the research found "a significant disparate impact in fare pricing of neighborhoods due to AI bias learned from ridehailing utilization patterns associated with demographic attributes."

While these findings are concerning, the study also offers a way for cities to push back: real-time auditing of geolocation-based algorithms (in this case, dynamic pricing of ride-hail trips). This points towards a future where cities require mobility operators to report transaction data in real time, and where that data is subjected to automated, ongoing audits that suss out whether geolocation-based algorithms are simply replicating old biases. Indeed, this study was itself based on periodically reported data provided to the City of Chicago by ride-hail operators.
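To make the idea of an automated audit a little more concrete, here is a minimal Python sketch. It is not the study's method, only an illustration of the general shape such a check could take: group reported trips by neighborhood category, compare average fare per mile against a reference group, and flag gaps beyond a tolerance. The field names (`group`, `fare`, `miles`), the function names, and the 10% tolerance are all assumptions made for this example.

```python
from collections import defaultdict
from statistics import mean


def fare_per_mile_by_group(trips):
    """Average fare per mile for each neighborhood group.

    `trips` is an iterable of dicts with hypothetical keys
    "group", "fare", and "miles"; zero-length trips are skipped.
    """
    rates = defaultdict(list)
    for trip in trips:
        if trip["miles"] > 0:
            rates[trip["group"]].append(trip["fare"] / trip["miles"])
    return {group: mean(values) for group, values in rates.items()}


def flag_disparities(trips, reference, tolerance=0.10):
    """Return groups whose average fare per mile differs from the
    reference group's by more than `tolerance` (an assumed threshold),
    mapped to the ratio of their average to the reference average.
    """
    averages = fare_per_mile_by_group(trips)
    flagged = {}
    for group, average in averages.items():
        if group == reference:
            continue
        ratio = average / averages[reference]
        if abs(ratio - 1.0) > tolerance:
            flagged[group] = ratio
    return flagged


# Illustrative, made-up trips (not real data).
sample_trips = [
    {"group": "A", "fare": 10.0, "miles": 5.0},
    {"group": "A", "fare": 20.0, "miles": 10.0},
    {"group": "B", "fare": 15.0, "miles": 5.0},
]
flagged_groups = flag_disparities(sample_trips, reference="A")
```

A real audit would need to control for legitimate pricing factors such as time of day, trip duration, and demand before attributing any gap to bias; this sketch only shows where an ongoing check would sit once operators report trip data.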
