First-person ontologies

From top-down to bottom-up constructs.

Ontologies are the basic set of structures that define knowledge in a field: the people, places, things, events, and relationships between them. In urban planning, much of how we describe the world originated in the modern period, when the Cartesian logic of remote sensing (from planes, satellites, and instrumented networks) dominated. This top-down frame reigned because it served planners, architects, and their clients well. But we also lacked the technological capability to zoom in or shift our point of view to the ground, where more subjective impressions hold sway. The disconnect between the overhead view and the view from the street is well known, but closing it has been seen as costly and risky.

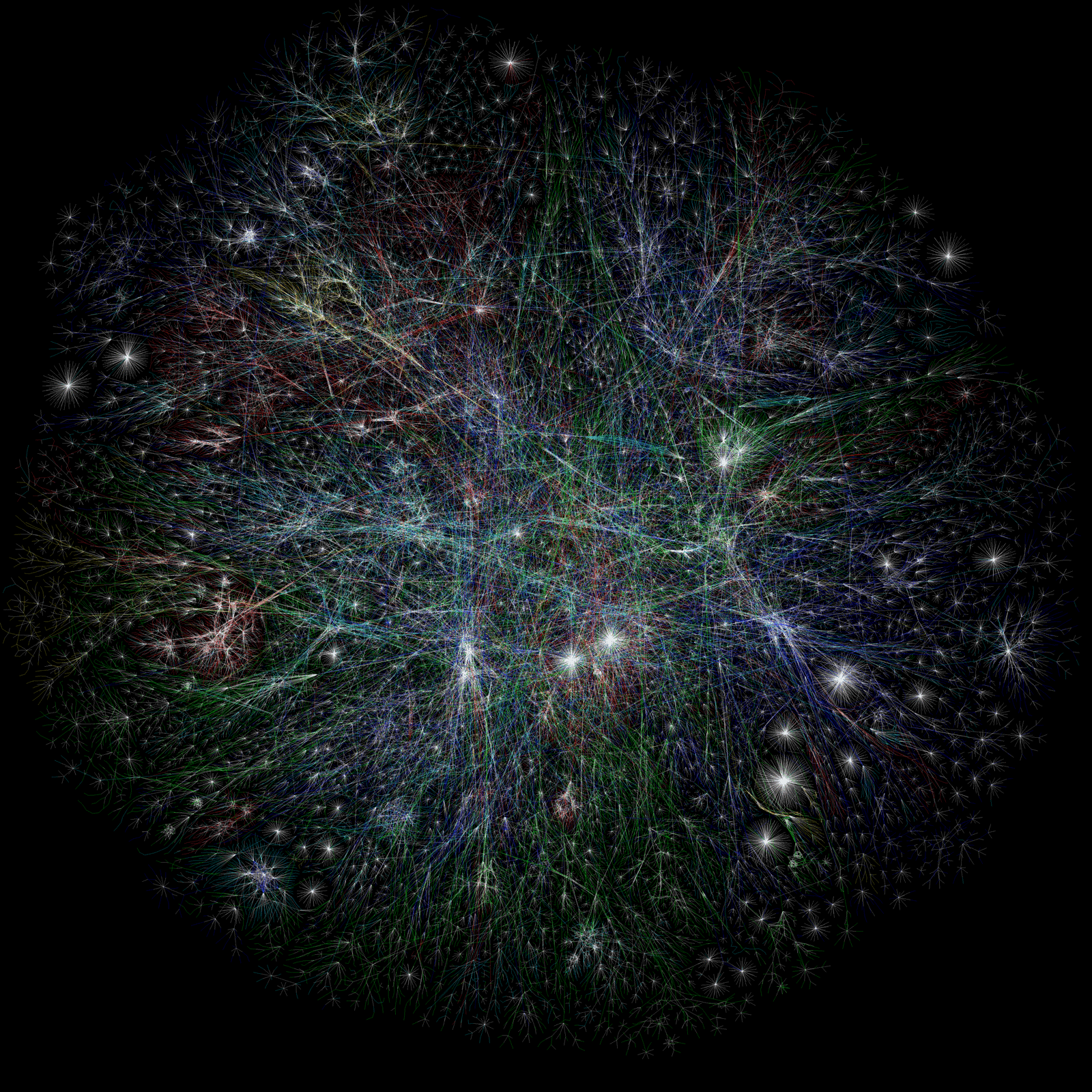

The gap between our big maps and our granular, lived experience of cities is closing. As new sensing and mapping tools converge with powerful simulation models, we can generate living images that show, in great detail and with high accuracy, what has happened, is happening, and could happen in cities. This creates a new viewpoint: a first-person viewpoint that more closely corresponds to our own. As these tools spread, they will trigger an upheaval in urban ontologies. Flaws in our old ways of describing entities and relationships will be revealed, and opportunities to use alternative schemas will be seized, often by those who have been excluded from earlier discourse. As the basic building blocks by which we organize our knowledge of cities shift, a first-person point of view may come to dominate and transform how we conceive, design, and disseminate visions for cities.

Signals

Signals are evidence of possible futures found in the world today: technologies, products, services, and behaviors that already exist but could become more widespread tomorrow.

..png)